Watch Tim Barfoot’s keynote on vision-based navigation at the 2021 International Conference on Intelligent Robots and Systems (IROS).

Abstract

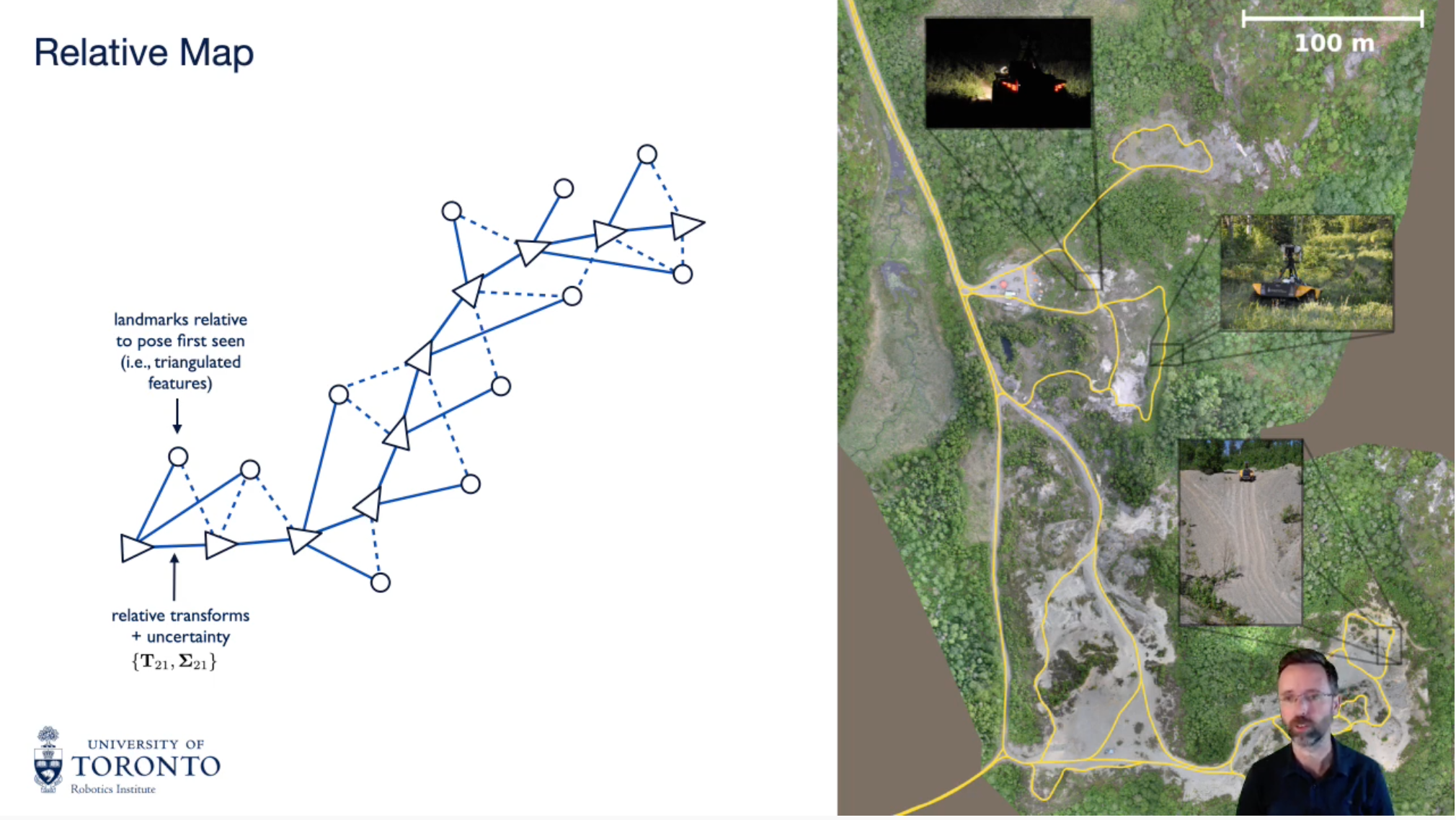

Many applications of mobile robots (e.g., transportation, delivery, monitoring, guiding) require the ability to operate over long periods of time in the same environment. Over the lifetime of a robot, many factors, from lighting and weather to seasonal change and construction, influence the way sensors ‘see’ that environment. Cameras are perhaps the most challenging sensor to work with in this regard due to their passive nature; however, they are also the cheapest, lowest-power, and highest-resolution sensors we currently have. I will discuss our lab’s ongoing effort to build a practical, lightweight, long-term navigation system for mobile robots based on cameras. If our robots have ‘seen further’, it is surely by standing on the shoulders of giants, and so I will reflect on the key technological ingredients from the literature that we have incorporated into our navigation system: matchable features in the front end, and relative, multi-experience map representations and robust estimation in the back end. The answer to the question posed in the title hinges on the ability to ‘match’ new images to those previously captured, so I will discuss how we have been using deep learning to automatically figure out which features to ‘keep an eye on’ for long-term localization, with some promising results. I will also use this talk to formally announce the open sourcing of our Visual Teach and Repeat 3 (VT&R3) navigation framework for the first time, so you can try it out for yourself!


Biography

Prof. Timothy Barfoot (University of Toronto Robotics Institute) works in the area of autonomy for mobile robots targeting a variety of applications. He is interested in developing methods (localization, mapping, planning, control) that allow robots to operate over long periods of time in large-scale, unstructured, three-dimensional environments, using rich onboard sensing (e.g., cameras, laser, radar) and computation. Tim holds a BASc (Aerospace Major) from the UofT Engineering Science program and a PhD in robotics from UofT. He took up his academic position in May 2007, after spending four years at MDA Robotics (builder of the well-known Canadarm space manipulators), where he developed autonomous vehicle navigation technologies for both planetary rovers and terrestrial applications such as underground mining. He was also a Visiting Professor at the University of Oxford in 2013 and recently completed a leave as Director of Autonomous Systems at Apple in California from 2017 to 2019. Tim is an IEEE Fellow, held a Canada Research Chair (Tier 2), was an Early Researcher Awardee in the Province of Ontario, and has received two paper awards at the IEEE International Conference on Robotics and Automation (ICRA 2010, 2021). He is currently the Associate Director of the UofT Robotics Institute, a Faculty Affiliate of the Vector Institute, and co-Faculty Advisor of UofT’s self-driving car team, which won the SAE AutoDrive Challenge four years in a row. He sits on the Editorial Boards of the International Journal of Robotics Research (IJRR) and Field Robotics (FR) and the Foundation Board of Robotics: Science and Systems (RSS), and he served as the General Chair of Field and Service Robotics (FSR) 2015, which was held in Toronto. He is the author of a book, State Estimation for Robotics (2017), which is free to download from his webpage (http://asrl.utias.utoronto.ca/~tdb).